# Adversarial Texture Optimization

Adversarial Texture Optimization from RGB-D Scans.

🌏 Source

🔬 Downloadable at: https://arxiv.org/abs/1911.02466. CVPR 2020.

Introduces a new method to construct realistic color textures in RGB-D surface reconstruction problems. Specifically, this paper proposes an approach to produce photorealistic textures for approximate surfaces, even from misaligned images, by learning an objective function that is robust to these errors.

# Main contributions

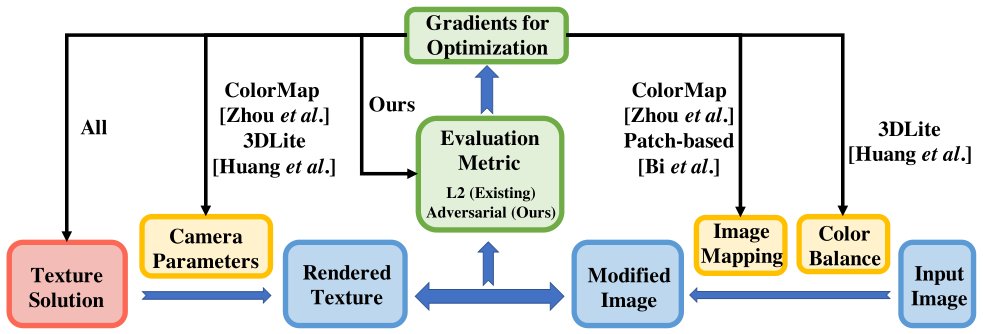

Key idea: account for misalignments through a learned objective function for the texture optimization.

Benefits:

- Rather than using a traditional objective function, like L1 or L2, here we learn a new objective function (adversarial loss) that is robust to the types of misalignment present in the input data.

- This novel approach eliminates the need for hand-crafted parametric models for fixing the camera parameters, image mapping, or color balance and replaces them all with a learned evaluation metric.

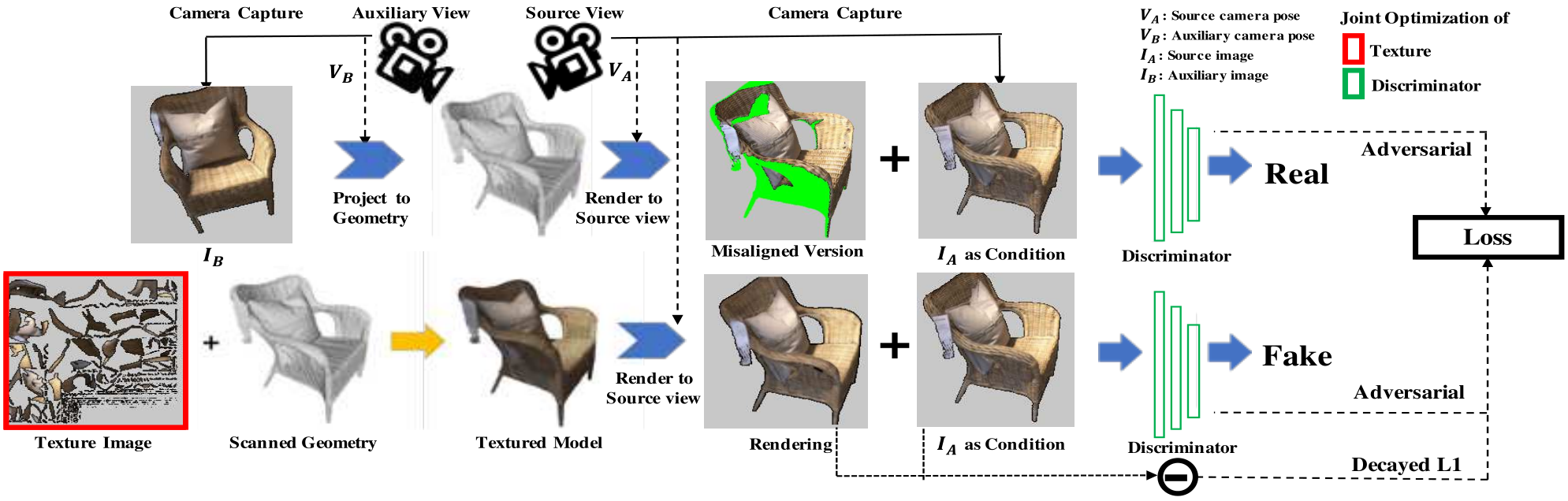

The key idea of this approach is to learn a patch-based conditional discriminator which guides the texture optimization to be tolerant to misalignments. Our discriminator takes a synthesized view and a real image, and evaluates whether the synthesized one is realistic, under a broadened definition of realism.

# Methods

From an input RGB-D scan, we optimize for both its texture image and a learned texture objective function characterized by a discriminator network.

# Misalignment-Tolerant Metric

For each optimization iteration, we randomly select two input images,

# Texture Optimization

To retrieve a texture, we jointly optimize the texture and the misalignment-tolerant metric using a view-conditioned adversarial loss.

Because the adversarial loss is difficult to train alone, we add an L1 loss to the texture optimization to provide initial guidance.

The final texture is the solution of the combined objective, which sums the adversarial and L1 terms.
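The combination of an adversarial term with an L1 guidance term can be sketched from the texture's point of view as follows. This is a minimal illustration, not the paper's implementation: the non-saturating `-log D(fake)` form, the weight `lam`, and all function names are assumptions, and the discriminator is represented only by the probabilities it outputs for rendered patches.

```python
import math

def adversarial_term(d_fake_probs, eps=1e-8):
    """Texture-side adversarial loss: mean of -log D(fake) over patches.

    d_fake_probs: discriminator outputs in (0, 1] for synthesized patches
    (hypothetical inputs; a real system would get these from a network).
    """
    return -sum(math.log(p + eps) for p in d_fake_probs) / len(d_fake_probs)

def l1_term(rendered, target):
    """Mean absolute difference between rendered and observed pixel values."""
    return sum(abs(r - t) for r, t in zip(rendered, target)) / len(rendered)

def texture_loss(d_fake_probs, rendered, target, lam=10.0):
    """Adversarial loss plus lam-weighted L1 guidance (lam is an assumed value)."""
    return adversarial_term(d_fake_probs) + lam * l1_term(rendered, target)
```

The L1 term pulls the texture toward the observed colors early on, when the discriminator gives little useful signal; the adversarial term then decides which of the many L1-plausible textures looks realistic.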

# Differentiable Rendering and Projection

To enable the optimization of the RGB texture of a 3D model, we use differentiable rendering to generate synthesized 'fake' views.

- Pre-compute a view-to-texture mapping using pyRender.

- Implement the rendering with differentiable bilinear sampling.
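The two steps above can be illustrated with a small pure-Python sketch. It assumes the view-to-texture mapping is already available as a per-pixel grid of continuous texel coordinates (the `view_to_texture` layout and both function names are hypothetical, and no pyRender call is shown). Bilinear sampling is a weighted sum of four texels, so the output is linear in the texture values, which is what lets an autodiff framework pass gradients back to the texture.

```python
import math

def bilinear_sample(texture, u, v):
    """Sample a 2D grid (list of rows) at continuous coords: u = column, v = row."""
    h, w = len(texture), len(texture[0])
    # Clamp so that the four neighbouring texels always exist.
    u = min(max(u, 0.0), w - 1.0)
    v = min(max(v, 0.0), h - 1.0)
    x0, y0 = int(math.floor(u)), int(math.floor(v))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = u - x0, v - y0
    # Interpolate along columns first, then along rows.
    top = (1 - fx) * texture[y0][x0] + fx * texture[y0][x1]
    bottom = (1 - fx) * texture[y1][x0] + fx * texture[y1][x1]
    return (1 - fy) * top + fy * bottom

def render_view(texture, view_to_texture):
    """Render a view from a precomputed mapping.

    view_to_texture: grid of (u, v) texel coordinates per view pixel,
    or None where the pixel does not hit the surface (rendered as 0.0).
    """
    return [
        [bilinear_sample(texture, *uv) if uv is not None else 0.0 for uv in row]
        for row in view_to_texture
    ]
```

Because the mapping depends only on geometry and camera pose, it can be computed once per view and reused at every optimization step; only the texture lookup has to be repeated.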

# Evaluation metrics

| Metric | Notes |
|---|---|
| Nearest patch loss | For each pixel |
| Perceptual metric | To evaluate perceptual quality. |
| Difference between generated textures and ground truth | Measure this according to sharpness and the average intensity of image gradients, in order to evaluate how robust the generated textures are to blurring artifacts without introducing noise artifacts. |

We cannot use standard image quality metrics such as MSE, PSNR, or SSIM, as they assume perfect alignment between the target and the ground truth.
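A sharpness comparison based on the average intensity of image gradients can be sketched as below. This is an illustrative assumption about how such a statistic might be computed (forward differences on a grayscale image, hypothetical function names), not the paper's exact metric. It compares one summary number per image rather than pixel pairs, so it does not require the two images to be aligned.

```python
import math

def mean_gradient_magnitude(image):
    """Average forward-difference gradient magnitude of a grayscale image (list of rows)."""
    h, w = len(image), len(image[0])
    total, count = 0.0, 0
    for y in range(h - 1):
        for x in range(w - 1):
            gx = image[y][x + 1] - image[y][x]  # horizontal difference
            gy = image[y + 1][x] - image[y][x]  # vertical difference
            total += math.hypot(gx, gy)
            count += 1
    return total / count if count else 0.0

def sharpness_gap(generated, ground_truth):
    """Absolute difference in mean gradient magnitude between two images."""
    return abs(mean_gradient_magnitude(generated) - mean_gradient_magnitude(ground_truth))
```

A blurred texture would score a lower mean gradient than the ground truth and a noisy one a higher mean gradient, so a small gap suggests the result is neither.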

# Results

# Synthetic 3D Examples

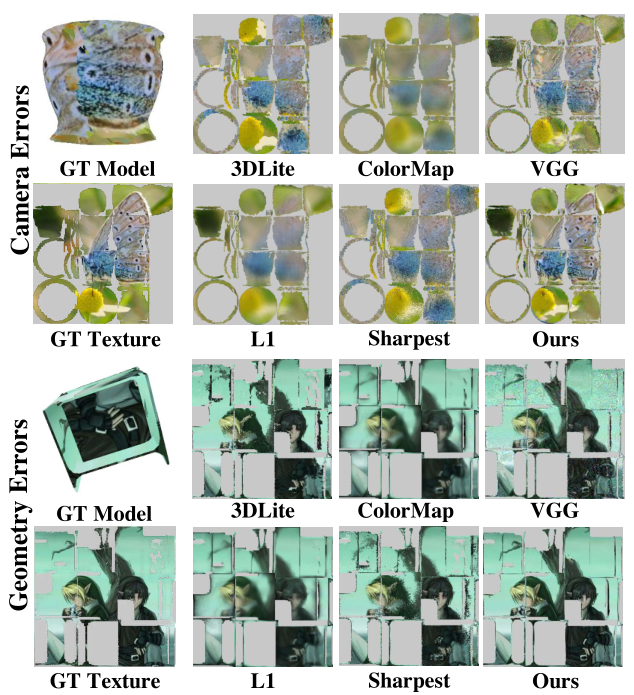

Texture generation in case of high camera or geometry errors.

# Increasing the camera/geometry error gradually

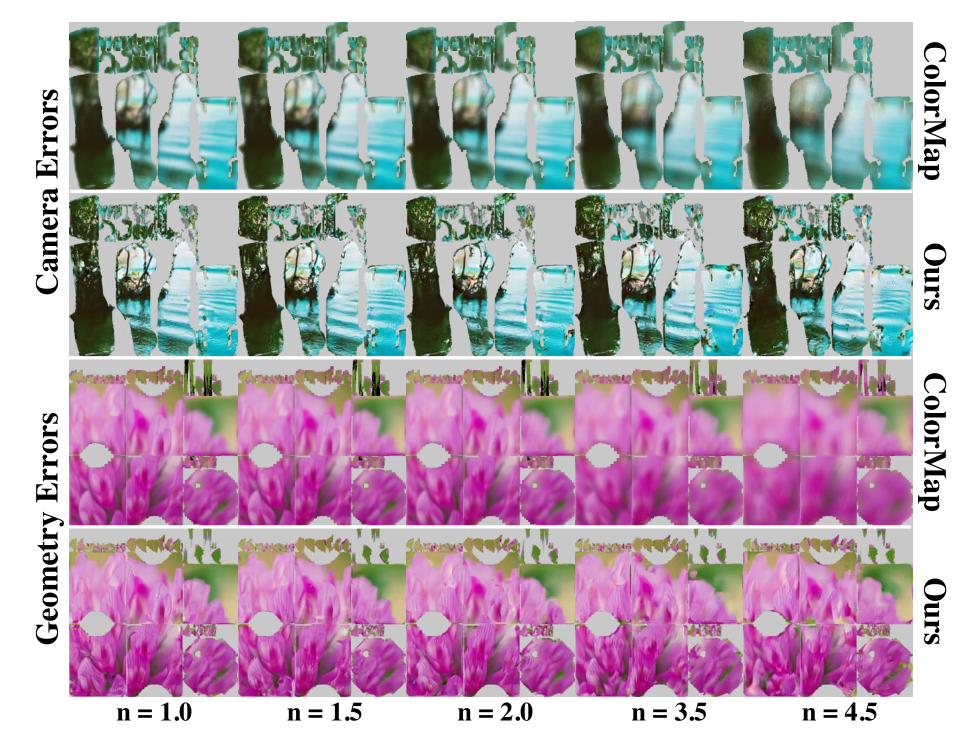

Texture generation under increasing camera or geometry errors.
